A new brain-computer interface (BCI) has allowed a man with motor neurone disease (MND) to ‘speak’ again, according to a study.

The technology translates brain signals into speech with up to 97% accuracy, making it the most accurate system of its kind.

When somebody tries to speak, the new device transforms their brain activity into text on a computer screen, which the computer can then read out loud.

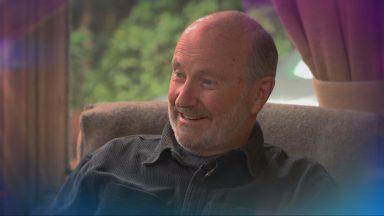

Researchers implanted sensors in the brain of a man whose speech was severely impaired by amyotrophic lateral sclerosis (ALS), a form of MND. He “cried with joy” when he was able to communicate his intended speech.

The condition affects the nerve cells that control movement throughout the body, and leads to a gradual loss of the ability to stand, walk and use one’s hands.

It can also cause someone to lose control of the muscles used to speak, resulting in a loss of understandable speech.

The study’s co-senior author, neurosurgeon David Brandman of University of California Davis Health in the US, said the technology was “transformative”.

“It provides hope for people who want to speak but can’t. I hope that technology like this speech BCI will help future patients speak with their family and friends,” he added.

To develop the system, the team enrolled Casey Harrell, a 45-year-old man with ALS, in the BrainGate clinical trial.

Mr Harrell had weakness in his arms and legs, his speech was very hard to understand and he required others to help interpret for him.

In July 2023 sensors were implanted into Mr Harrell’s brain, in a region responsible for co-ordinating speech.

“We’re really detecting their attempt to move their muscles and talk,” explained Sergey Stavisky, an assistant professor in the Department of Neurological Surgery.

Mr Harrell used the system in both prompted and spontaneous conversational settings, and the speech decoding happened in real time.

The decoded words were shown on a screen and read aloud in a voice that sounded like Mr Harrell’s before he had ALS.

The voice was composed using software trained with existing audio samples of his pre-ALS voice.

At the first speech data training session, the system took 30 minutes to achieve 99.6% word accuracy with a 50-word vocabulary.

Dr Stavisky said: “The first time we tried the system, he cried with joy as the words he was trying to say correctly appeared on screen. We all did.”

In the second session, the size of the potential vocabulary increased to 125,000 words.

With just an additional 84 minutes of training data, the BCI achieved 90.2% word accuracy with this greatly expanded vocabulary. After continued data collection, it has maintained 97.5% accuracy.

The findings were published in the New England Journal of Medicine.
