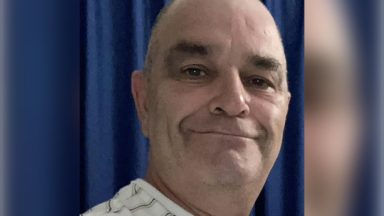

Murray Dowey, 16, took his own life after becoming the victim of online sextortion. His parents have launched a lawsuit against the internet giant Meta.

The parents of a 16-year-old boy who took his own life after he became the victim of online sextortion have launched a lawsuit against the internet giant Meta.

Lawyers for Murray Dowey’s parents claim the trillion-dollar company “prioritised profit,” resulting in missed opportunities to protect teenagers in the years leading up to his death.

Scammers used the popular social media app Instagram, owned by Meta Platforms, Inc., to target Murray in December 2023.

The teenager, from Dunblane, was contacted on the platform by a scammer posing as a young girl. He was tricked into sending an intimate image, which was then used to blackmail him.

Murray took his own life just hours after the interaction – he was 16 years old.

The legal action, brought through the Social Media Victims Law Center (SMVLC) on behalf of Mark and Ros Dowey, is thought to be the first of its kind against Meta by a family in the UK. The couple are named in the lawsuit alongside a mother in the US whose teenage son also took his own life after a sextortion attempt.

The lawsuit says Meta failed to adopt “expected” safety features, all the while recommending teen Instagram users to “sextortionists who Meta itself already had identified as predators.”

It also alleges that “Meta’s choice to allow known criminal misconduct” was aimed at “safeguarding its engagement metrics.”

Sextortion, or sexual extortion, is when intimate images or videos are recorded and used to coerce or financially exploit the victim. It is mostly committed by organised criminal gangs overseas.

According to the Internet Watch Foundation, cases of sextortion have soared by 72% over the past year, and almost all confirmed cases involve boys.

Perpetrators often target young people on popular social media apps before threatening to send the images to friends and family unless a sum – often thousands of pounds – is paid.

The lawsuit alleges Instagram knew teens like Murray were at risk due to a lack of robust privacy settings for young people, and that Meta “prioritised profit over protecting vulnerable teen users from sexploitation.”

The complaint includes a 2018 email to Instagram’s CEO, Adam Mosseri, from Chief Information Security Officer Guy Rosen, which appears to raise concerns about adult-minor interactions on the platform by describing a hypothetical scenario. It was sent five years before Murray’s death.

Email sent to Instagram’s CEO Adam Mosseri outlined in court filings

“Someone looks me up on Instagram/Facebook because I’m a cute girl, sends me a message (or even a friend request) and we start messaging.

“That is where all the bad stuff happens – From the ‘less bad’ tier 2 sexual harassment like dudes sending d*** pics to everyone; to the tier 1 cases where they end up doing horrible damage.”

In theory, what happened to Murray would have fallen into what Meta’s Chief Information Security Officer described in 2018 as ‘Tier 1’, the most serious category of harm. For Murray, it ended with him taking his own life.

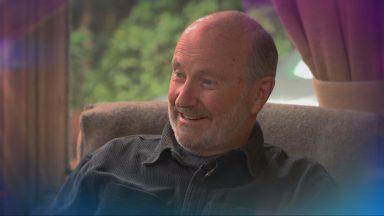

Asked how he felt after reading the email, Murray’s dad said: “Anger. Pure anger. They could have fixed this. They put money before kids’ lives… Murray got caught up in it, and unfortunately, he’s gone.”

The lawsuit goes on to outline how, according to the SMVLC, Meta prioritised “profit over protecting vulnerable teen users from sexploitation”.

Court filings reference an internal Meta analysis from 2019, which estimated there were 3.5 million profiles “conducting inappropriate interactions with children” via Instagram’s direct message function.

According to the court documents, later that year Meta conducted research on account privacy that recommended all teen accounts be set to private by default.

This was intended to ensure that communications took place only between connected accounts, preventing unwanted interactions with strangers.

The following year, Meta’s growth team concluded that the changes would result in a “loss of 1.5 million monthly active teens a year”, causing a “problem with engagement and growth”. Months later, it estimated that the changes would reduce the global teen audience by 2.2%.

When researchers were informed of the growth team’s initial findings, one allegedly asked: “I[s]nt safety the whole point of this team?” Another responded: “The point of this team is to prevent another… article about how s***** instagram is.”

It wasn’t until 2021 that Meta began defaulting new accounts for under-16s to private, claiming “wherever we can, we want to stop young people from hearing from adults they don’t know or don’t want to hear from.”

Lawyers for the Doweys argue that because Murray already had an Instagram account, it was not switched to private by default.

At the time, Meta said teens would be prompted to consider a private account, but could still choose to remain public.

Matthew Bergman, Founding Attorney of SMVLC, told ITV News: “What happened to [Murray Dowey] was not an accident, it was not a coincidence. It was a foreseeable consequence of Meta’s design decisions to prioritise profits over safety and to maximise engagement by connecting young, vulnerable users to predatory adults…

“Meta was well aware of the sextortion phenomenon years before this young man was even contacted by an extortionist… that is the kind of callous disregard of basic human dignity and basic human decency that we believe gives rise to this lawsuit…”

Bergman’s claims are echoed in his complaint, which says that up until the end of 2023 Meta “were recommending minors to potentially suspicious adults and vice versa”, and that this recommendation feature “was responsible for 80% of violating adult/minor connections.”

In September 2024, Meta extended its private-by-default setting to all teen accounts, covering all under-18s, and it has since introduced its Teen Accounts programme while taking “steps to protect users from online predators.”

Meta has previously responded to claims of teens’ exposure to harmful content online, saying: “The full record will show that for over a decade, we have listened to parents, researched issues that matter most, and made real changes to protect teens – like introducing Teen Accounts with built-in protections and providing parents with controls to manage their teens’ experiences.”

Meta created Teen Accounts in 2024. Their main features include limits on sensitive content, automatically private accounts for every user aged between 13 and 18, notifications switched off at night, and no messaging from adults the teen is not connected to.

Meta has previously said of Teen Accounts: “We know parents are worried about their teens having unsafe or inappropriate experiences online, and that’s why we’ve significantly reimagined the Instagram experience for tens of millions of teens with new Teen Accounts.”

When ITV News initially investigated sextortion in 2024, Meta said it does not tolerate any kind of abuse on its platforms.

Murray’s mum, Ros, told us: “We always suspected it was profit over safety, but I think there’s now clear evidence that they knew how flawed and faulty their products were by design, they knew that children were dying, and they chose to just continue anyway.

“Even though their safety team were recommending really quite cheap and simple fixes that would completely reduce the number of unwanted direct messages and connections between predators and young boys, especially, and they chose profit over that.”

“I know what we’re taking on, we’re taking on one of the biggest global organisations, bring it on – somebody has to stand up to them,” she added.

Murray’s dad said: “We’ve hit rock bottom with losing [Murray] and you know… we’ve got nothing to lose. We can’t back away from this fight.”

ITV News contacted Meta about the case.

The company responded with this statement: “Sextortion is a horrific crime. We support law enforcement to prosecute the criminals behind it and we continue to fight them on our apps on multiple fronts.

“Since 2021, we’ve placed teens under 16 into private accounts when they sign up for Instagram, which means they have to approve any new followers.

“We work to prevent accounts showing suspicious behavior from following teens and avoid recommending teens to them. We also take other precautionary steps, like blurring potentially sensitive images sent in DMs and reminding teens of the risks of sharing them, and letting people know when they’re chatting to someone who may be in a different country.”

How to get help if you have been affected by the issues mentioned in this article:

- Revenge Porn Helpline – Helpline: 0345 6000 459 (Over 18s)

- Childline – Helpline: 0800 1111 (Under 19s)

- CALM (Campaign Against Living Miserably) – Helpline: 0800 58 58 58

- MIND provides advice and support to empower anyone experiencing a mental health problem. Information line: 0300 123 3393

- Samaritans is an organisation offering confidential support for people experiencing feelings of distress or despair. Phone 116 123 (a free 24 hour helpline).
